The General Data Protection Regulation (GDPR) came into effect in May 2018 and has significant implications for the handling of personal data. It was designed to protect the privacy of European Union (EU) citizens and to ensure that their personal data is handled securely and with transparency.

One area that is often overlooked is the handling of test data. Test data is used for a variety of purposes, including software development, quality assurance, and training. However, this data often contains personal information and must be handled with the same level of care and consideration as production data.

In this article, we will explore recommended practices for handling test data in the context of GDPR compliance. How to identify personal data, storing and deleting data, and how to ensure that data is secure and protected.

By following these guidelines, organizations can ensure that they are fully compliant with GDPR regulations and are handling test data in a responsible and ethical manner.

What Is Considered Personal Data?

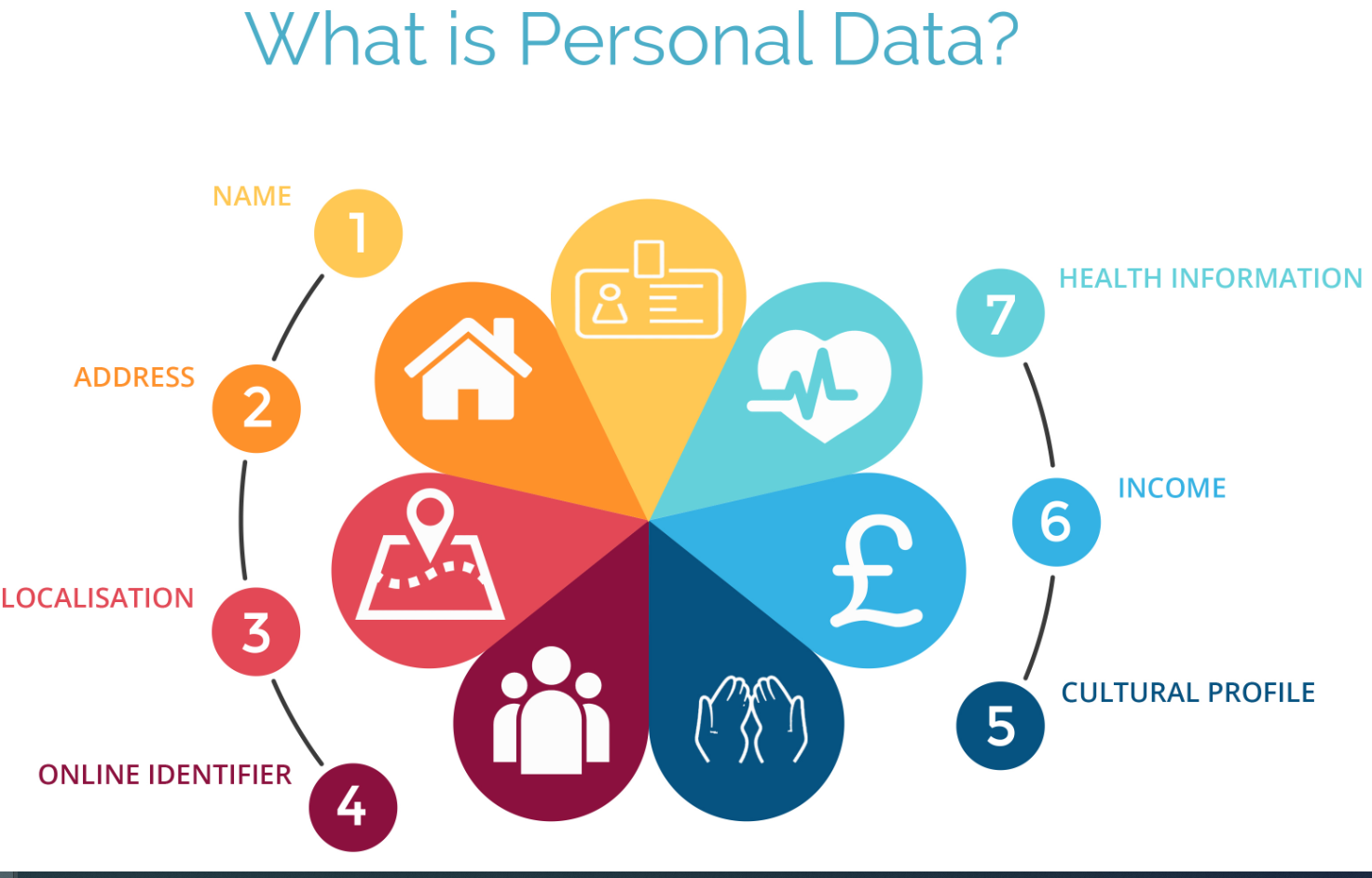

While it may be easy to say that GDPR regulation refers to personal data. It is not really clear what that data is. Of course my name and date of birth are personal data, but how about my email address, what’s on my blog, or my make and model of car? Before we continue, let us define what is considered personal data from the GDPR perspective.

The GDPR defines personal data as any information that can directly or indirectly identify an individual.

This includes data such as name, address, date of birth, phone number, email address, and social security number. It also includes sensitive information such as race, ethnicity, religion or health data.

In the context of test data, you might find personal data in test cases, test scripts, test data sets, and other materials used in software development and testing. Often it’s been copied into test regions from production.

It's important to note that personal data in test data is subject to the same rules and regulations as personal data in production data, and must be handled in accordance with GDPR guidelines.

Just Generate The Data Yourself?

Since the topic of using personal data in testing and test cases seems to be very sensitive and rather complicated, you might ask: Why not generate the data myself?

This is a valid point, since there are many tools that can generate data in the correct format for personal data. These tools can be used to store data for later use (Data Integrity Tools) or to generate data based on test ideas you might have during a testing session (What Tools Should I Learn). While most tools would help you generate a lot of data for testing, the process can be time consuming and at times it won’t deliver the expected results. This is true especially if the application under test is complex and if there need to be connections between the generated data.

But wait, can’t we use artificial intelligence (AI) to generate the data? Right now there is an AI system for just about everything, and the most well known AI system on the market is ChatGPT. I actually asked ChatGPT this very question.

According to ChatGPT, while AI can be used to generate test data, it's important to validate and test AI-generated test data to ensure that it accurately reflects the real-world scenarios that it is intended to replicate. Additionally, any personal data included in the test data should be anonymized or removed to comply with GDPR regulations.

No Data Like Production Data

Even if we can generate data via tools and AI, the best test data will always be your production data. This is because it provides all combinations of situations and user data that are currently in the production environment. As a result, you can cover a lot of real world scenarios and minimize bugs.

However, since GDPR was enacted, you cannot just copy the production data to a test or integration system. But since there is no data like production, there needs to be a way to copy the production data and still be GDPR compliant. Fortunately, there is, and it is called data anonymization.

Data anonymization is the processing of data with the aim of irreversibly preventing the identification of the individual. In other words, for data to be fully anonymized, it must be impossible to connect to an individual person..

There are several techniques that can be used to anonymize test data:

- Masking: Replacing sensitive data with a generic value, such as "****" for credit card numbers or "XXX-XX-XXXX" for social security numbers. This technique can preserve the structure of the data, but may limit its usefulness, especially when it comes time to distinguish one value from the other.

- Perturbation: Adding random noise to the data, such as adding or subtracting a small amount from each data point. This can preserve the statistical properties of the data, but may affect its usefulness for analysis. For example, you might have a dataset representing customer ratings for a restaurant. Each data point represents a rating on a scale of 1 to 5: Original Data:[4, 3, 5, 2, 4]. Now, let's apply perturbation by adding or subtracting random noise between -0.5 and +0.5 to each rating: Perturbed Data:[4.3, 2.8, 4.9, 1.7, 3.9]. If you want to calculate the average rating for the restaurant in the original data, the average rating is 3.6. However, in the perturbed data, the added noise might shift the ratings slightly, resulting in a different average.

- Generalization: Removing specific details from the data to make it less specific, such as removing the exact date of birth and only keeping the year. This technique can preserve the overall trends in the data but may affect its usefulness for detailed analysis.

- Anonymization through aggregation: Combining data from multiple individuals into a single record to make it impossible to identify any individual. This can preserve the overall statistics of the data but may not be useful for detailed analysis of individual records.

Now depending on the context you can use one or multiple techniques. Employing multiple anonymization techniques can reduce the risk of re-identification and provide more robust protection for personal data.

Regardless of the approach taken, there are some important aspects to take into account. Firstly you need to make sure that anonymized data cannot be re-identified. You do this by removing any unique identifiers or other information that could be used to link the data to specific individuals. In addition, you need to document the anonymization process for auditing and compliance purposes, including the techniques used and the rationale for their selection.

Retention And Disposal Of Test Data

Apart from rules for collecting and using personal data, GDPR also has regulations that define retention and disposal of data.

GDPR requires that personal data not be kept in a form which permits identification of individuals for any longer than is necessary for the purposes for which the personal data are processed.

The wording “in a form which permits identification” refers to the possibility of retaining data which has been fully anonymised. So if you have performed a full anonymization of your test data, the obligation to retain personal data only for so long as is necessary does not apply. However, if an organization retains anonymised data on this basis, they should keep its identifiability status under continuous review

Even if there are no legal requirements in regards to retention and deletion of fully anonymized test data, it is still recommended that organizations establish clear retention and disposal policies for anonymized test data. This is to ensure that the data is being stored and disposed of securely, in accordance with best practices. This can help organizations protect the confidentiality, integrity, and availability of the data, and minimize the risk of data breaches or other security incidents.

Besides GDPR requirements, organizations should also consider any other legal or regulatory requirements that may apply to the disposal of anonymized test data, such as industry-specific standards or contractual obligations. In some cases, contractual obligations may require the secure disposal of anonymized data, even if it is not considered personal data under the GDPR.

Conclusion

Handling test data in accordance with GDPR requirements is essential for ensuring the privacy and security of individuals' personal information. To comply with GDPR, organizations must take steps to protect personal data throughout the entire testing process, from acquisition and storage to use and disposal. This includes conducting data protection impact assessments, establishing clear retention and disposal policies, and implementing appropriate technical and organizational measures to safeguard personal data.

Anonymization is an effective technique for protecting personal data during testing, but it does not exempt organizations from GDPR requirements related to retention and disposal of personal data. Even if test data has been fully anonymized, organizations must still establish clear retention and disposal policies for the data and ensure that these policies are being followed in practice.

In addition to compliance with GDPR requirements, handling test data responsibly can help organizations build trust with their customers and stakeholders, as well as avoid legal and reputational risks associated with data breaches or other security incidents. By adopting best practices for handling test data, organizations can demonstrate their commitment to protecting individuals' privacy and maintaining high standards of data protection.

Overall, handling test data in compliance with GDPR requirements requires ongoing attention and effort from organizations. By taking a proactive approach to data protection, implementing appropriate safeguards, and regularly reviewing and updating their policies and procedures, organizations can ensure that they are effectively managing the risks associated with testing and protecting the privacy and security of personal data.

References

- What Is GDPR?

- Anonymization and GDPR compliance; an overview

- Definition of “personal data” under the GDPR